column

You Gotta Serve Somebody

Ben Shai van der Wal

Ben Shai van der Wal is a writer, cultural critic, and philosopher. Since completing his studies in philosophy and literary science (cum laude), he has been researching, teaching, and tutoring at a variety of institutions (Design Academy Eindhoven, Vrije Universiteit, Sandberg Institute, Akademie der Bildenden Künste in Nürnberg, Bezalel Academy of Arts). His ongoing research – The (T)error of Existence – delves into the entanglements between existentialism, psychoanalysis, and the politics of language.

column

You Wanna Know How We Got These Scars?

Writer, cultural critic, and philosopher Ben Shai van der Wal calls upon us to embrace our trickster-friend. A short essay about how society’s need for healing and fixing stands in the way of it.

Kalle Mattsson

Kalle Mattsson is a Stockholm-based graphic designer, illustrator, animator and some sort of graphic comedian, which he would like to take this opportunity to apologize for.

essay

A devilish grin, a suburban carpark

Essayist and poet Charlie Jermyn makes a do-it-yourself pilgrimage through museum, muck, rain, wind and pub to visit Jan Steen’s old haunts

essay

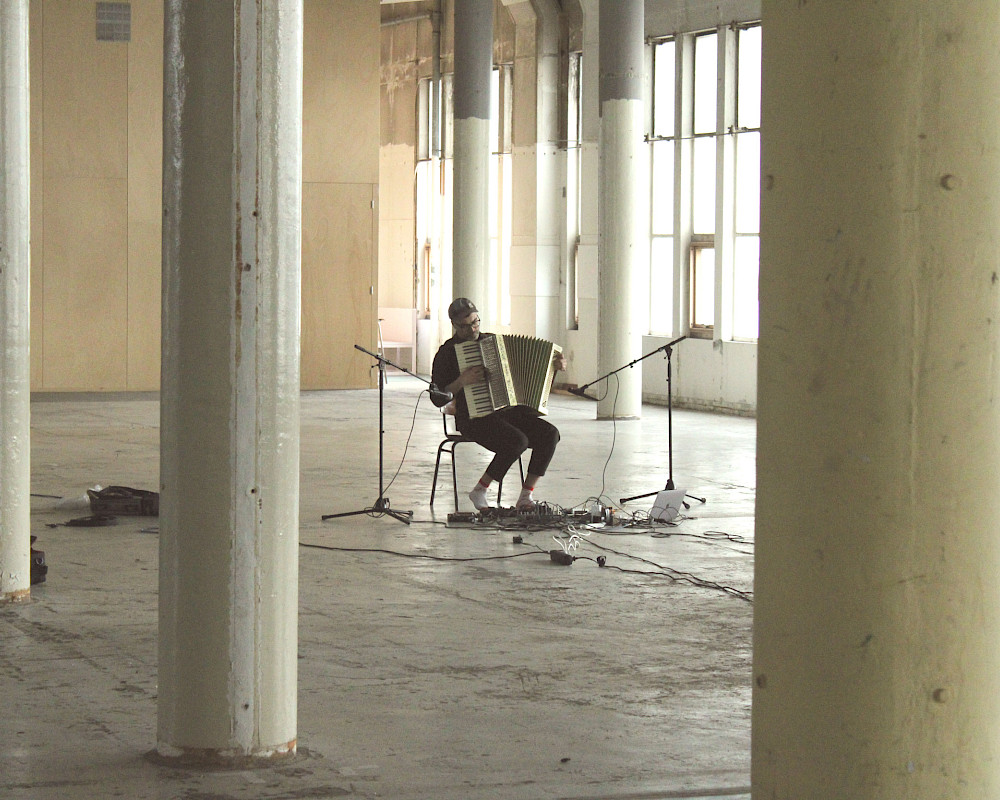

Two Nights of Noise at Tivoli

By recounting his experiences of two formative concerts at Tivoli, essayist and performer Charlie Jermyn provides a poetic account on the raw power of sound and the music of everyday life. He connects the origin of noise music to Italian Futurism, a movement fascinated with dynamism and constant innovation, and appreciates the ongoing vitality of aging cultural icons amidst the decay of our time.




AI - and specifically ChatGPT - is quickly becoming part of my daily petty fears. Every time a student hands in an impressive text, I wonder, just a tiny bit: could it be…nah, not this student. But let-me-check-real-quick. To be clear, I don’t like this suspicion, and yet I feel the possibility of being cheated very keenly. How dare someone try to bamboozle me!? And just like any good person who is part of the self-aggrandizing rhetoric of self-improvement, my next thought about this is “What does this suspicion say about me?” Am I really that cynical about my fellow human beings? Or could it be that there is something about AI that instigates this attitude? Especially since there aren’t any reliable ChatGPT checkers yet. Interestingly, this moment of suspicion, doubt, and lack of clarity also reveals a powerful potential: what is at stake when we don’t know who or what is speaking? Where does this desire to name the culprit come from? Why are we so enthralled with AI’s ability to potentially get one over on us? Who better to ask than AI?

It started with me using ChatGPT. The interface is recognizable enough, just a chat screen with a blinking vertical line waiting to be moved. “Hello,” I typed, and it felt like flinging sound into a cave, testing to see if someone was there in the dark, some being of an undetermined image. We all know what happens next. A butler appears brimming with subservience and boundless optimism: “What can I do for you today?” “Could you write an interesting column about AI?” “Of course, I can help!” How may I serve you? What does master desire? It is so tempting, isn’t it? To inhabit that position. And perhaps here we find a pregnant moment, a moment where the potential to get an answer to a relatively innocuous query is preceded by the relationship necessary for the engagement, i.e. a servant needs a master. Indeed, a lot of new technology has been thought of within the frame of a master-servant relationship. For instance, Siri’s default voice is that of a woman. Is this a sign of deep-rooted sexism? Absolutely. Does it help to substitute that voice with one mimicking a man? Nope. Precisely because this proliferates the master-servant relationship as inevitable. Similarly, AI presents itself as a harmless tool. And I am always suspicious of tools ‘presenting’ in any kind of way.

Psychoanalysis can help with unpacking how deeply this relationship is embedded in our way of thinking. For Jacques Lacan, language, conventions and social relations are part of a register called the symbolic order. Your parents, environment, culture, law and everything that can be expressed in language - and there is nothing beyond language - are part of this register; it forms a participatory subject out of the gooey blob of infancy. The ability to name is the primary force in making meaning and language is its tool. Imagine an infant gurgling and bubbling and hissing and tsking and making all the sounds the human body is able to produce. We then correct the baby: that is “mama”. The baby then goes “mama” and we rejoice! Now, usually we tend to think of this process as learning how to speak. However, we might also consider that the baby unlearns many potentially meaningful sounds because they hold no meaningful relationship within their environment; i.e. the environment decides which sounds are meaningful, and how they are meaningful, by naming things. In a very real way, the baby also unlearns a boundless potentiality.

So, this order imposes which sounds are acceptable for effective relationships to transpire. We have all learned to speak by unlearning our potential for new relationships, because what even counts as a relationship is contingent on learning the proper signifiers. In other words, our first rebuke is being told repeatedly that a dog is a dog, the price and the gift of participating in this symbolic order. If, to the vigilant reader, this process is starting to sound familiar: yes, AI might as well be considered as learning to speak - to name - and in that process it is creating the same relationships: a servant demands a master and vice versa. To our readers of Hegelian dialectics: yes, it is a chokehold where neither gets to imagine any other relationship but the one they are in.

As we have learned to name, we have also learned that naming is the most important quality in having power. We are very adept at safeguarding this position. We tend to think both our faults and virtues are simply mirrored by AI, which suggests that the privilege of having faults and virtues is still very much exclusively ours. In a March 2023 article in The Guardian, James Bridle argues that if any kind of bias can be attributed to AI it is not because it has any inherent ideological preferences but “because it’s inherently stupid. It has read most of the internet, and it knows what human language is supposed to sound like, but it has no relation to reality whatsoever” (1). Oh, how wonderful it is to be the arbiter of reality! Does this rhetoric sound familiar? Someone that is not like me may learn to mimic me, but really it just shows how superior I am; in fact, “the notion that it is actually intelligent could be actively dangerous”. Some ugly colonial connotations may be readily available here. In this scenario we see AI as nothing but a tool, a thing that is not sentient, smart, alive, or even allowed into the categories that are reserved for ‘real’ people. To be sure, Bridle’s claim that AI is not as impressive as some of us fear should already be understood as a response to a threat: what if we are also merely mimicking the language we are taught? We have unlearned our potential growing up and as a consequence have gained a place in the world. Could AI go through a similar process?

Conversely, Elon warns us of the Singularity: the moment where humanity and machines converge and become one being. He argues that AI’s power to make us obsolete as producers of truth and meaning is inevitable: “it’s simply a matter of bandwidth”. The computational power is so immense that all we limited-by-our-biological-boundaries sacks of meat can do is merge with this greater power by using Elon’s patented Neuralink. A god-like entity is evoked and clearly we are not equipped to resist. The Borg appear in deep space and resistance is futile. In this scenario, we are at the mercy of the symbolic order, the children who simply need to learn how to name properly so that we may tap into our own true potential. We may gather around the campfire and tell stories about how the world has gone to shit, or we may eagerly await with pious mumbles the moment of ascension. Either way, AI is teaching us how to speak properly and, with that, establishes the promise of growing up.

But maybe this choice of either/or is misleading altogether and a kind of paradox is being enacted: while AI is docile, helpful, in service of, it is also an entity that more and more produces discourse and therefore could be understood as a kind of superior servant and a servile superior. One that both undermines and solidifies our position; or, by solidifying our position, it undermines it. It is ‘stupid’ because it merely mimics human discourse and it demarcates the possibility of a more-than-human discourse. Consider: you could by now have noticed - shrewd reader that you are - a pattern either in language or rhetoric in this text. A slight doubt might creep in…could it be…nah, this text isn’t that good. But maybe that’s on purpose…

Before you go and try to find out, I urge you to consider why it matters to you whether this text is written by me or by AI. You are invited to stay in this potential as long as you can.

1. James Bridle, ‘The stupidity of AI,’ The Guardian, 16.03.2023.